The Evaluator-Optimizer Framework: How AI Learns to Perfect Itself

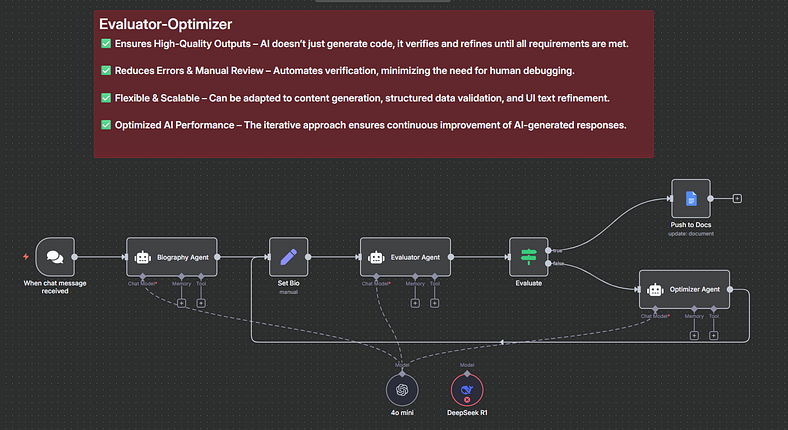

In the evolving world of AI agents, one of the most powerful patterns I’ve come across is the Evaluator-Optimizer Framework. It’s a self-improving loop where AI doesn’t just generate content — it critiques, revises, and refines it until it’s good enough to pass its own quality check.

Think of it as a human-in-the-loop review system in which the reviewer is another model instead of a person.

How It Works

Generation (Creator Agent): Starts with the task (e.g., a short bio) and produces an initial draft from the given info.

Evaluation (Judge Agent): Checks the draft against set criteria (e.g., has a quote, is light and humorous, no emojis).

Optimization (Refiner Agent): Revises the draft using evaluator feedback, creating a loop of Generate → Evaluate → Optimize until approval.
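The three steps above can be sketched in a few lines of Python. This is a minimal illustration, not a definitive implementation: the function names (`generate`, `evaluate`, `optimize`, `run_loop`), the `Verdict` and `LoopResult` types, and the `max_revisions` cap are all assumptions made for the example. The agents are rule-based stand-ins where a real system would call a language model, and only two of the example criteria (has a quote, no emojis) are checked, because "light and humorous" needs a model-based judge.

```python
import re
from dataclasses import dataclass, field
from typing import List

# Rough emoji matcher covering common pictograph and symbol ranges (not exhaustive).
EMOJI = re.compile("[\U0001F300-\U0001FAFF\u2600-\u27BF]")


@dataclass
class Verdict:
    approved: bool
    feedback: List[str] = field(default_factory=list)


@dataclass
class LoopResult:
    draft: str
    approved: bool
    revisions: int


def generate(task: str, facts: str) -> str:
    """Creator stand-in: a real agent would prompt a model with the task and facts.

    This stub ignores the task and deliberately adds an emoji so the judge
    has something to reject.
    """
    return f"{facts} \U0001F30A"


def evaluate(draft: str) -> Verdict:
    """Judge stand-in: checks the draft against two rule-based criteria."""
    problems = []
    if EMOJI.search(draft):
        problems.append("remove emojis")
    if '"' not in draft:
        problems.append("add a quote")
    return Verdict(approved=not problems, feedback=problems)


def optimize(draft: str, feedback: List[str]) -> str:
    """Refiner stand-in: applies each piece of judge feedback to the draft."""
    if "remove emojis" in feedback:
        draft = EMOJI.sub("", draft).strip()
    if "add a quote" in feedback:
        draft += ' "[placeholder quote]"'  # a real refiner would write one
    return draft


def run_loop(task: str, facts: str, max_revisions: int = 3) -> LoopResult:
    """Generate, then evaluate and optimize until approval or the revision cap."""
    draft = generate(task, facts)
    verdict = evaluate(draft)
    revisions = 0
    while not verdict.approved and revisions < max_revisions:
        draft = optimize(draft, verdict.feedback)
        revisions += 1
        verdict = evaluate(draft)
    return LoopResult(draft=draft, approved=verdict.approved, revisions=revisions)


if __name__ == "__main__":
    result = run_loop("Write a short bio", "Jon, 40, lives by the ocean.")
    print(result.approved, result.revisions)
    print(result.draft)
```

The revision cap is a design choice I added for the sketch: "until approval" on its own can loop forever if the judge's criteria are never met, so the loop reports `approved=False` once the cap is reached instead of running indefinitely.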

Why This Matters

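The benefits follow from the loop itself: drafts are checked against explicit criteria before they are accepted, and less human review is needed for each piece of output.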

Example in Action

We gave the system minimal input:

“Jon, 40, lives by the ocean.”

The loop worked its magic. The result was a full biography, complete with life details, personality, quotes, and even a dad joke about fish, all from one sentence of input.

Why I’m Excited

This framework feels like the future of self-correcting AI systems. Instead of relying on humans to check every piece of output, evaluators can act as internal quality-control agents, while optimizers keep refining until the output passes.

Imagine this applied to:

AI won’t just create — it’ll learn to meet the bar, every single time.

💡 Takeaway: The Evaluator-Optimizer Framework is a glimpse into autonomous, scalable quality assurance for AI. The best part? You can swap in different models at each stage — cheap ones for drafting, powerful ones for optimizing.
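To make the "swap in different models" point concrete, here is one way the stages could be kept pluggable. Treat it as a sketch under assumptions: the `Stages` container, the `run` function, and the stub functions are hypothetical names, and each stub stands in for a call to whichever model you assign to that stage.

```python
from dataclasses import dataclass, replace
from typing import Callable


@dataclass
class Stages:
    creator: Callable[[str], str]        # task -> first draft
    judge: Callable[[str], str]          # draft -> "" if approved, else feedback
    refiner: Callable[[str, str], str]   # (draft, feedback) -> revised draft


def run(stages: Stages, task: str, max_revisions: int = 3) -> str:
    """Run the loop with whichever callables are plugged into each stage."""
    draft = stages.creator(task)
    for _ in range(max_revisions):
        feedback = stages.judge(draft)
        if not feedback:  # empty feedback means the judge approved
            break
        draft = stages.refiner(draft, feedback)
    return draft


# Stub stages: each would wrap a different model in a real setup.
def cheap_drafter(task: str) -> str:
    return f"draft for: {task}"


def strict_judge(draft: str) -> str:
    return "" if draft.endswith("(polished)") else "needs polish"


def strong_refiner(draft: str, feedback: str) -> str:
    return draft + " (polished)"


def weak_refiner(draft: str, feedback: str) -> str:
    return draft + " (touched up)"


if __name__ == "__main__":
    baseline = Stages(cheap_drafter, strict_judge, strong_refiner)
    print(run(baseline, "short bio"))

    # Swap only the refiner; the creator and judge stay the same.
    swapped = replace(baseline, refiner=weak_refiner)
    print(run(swapped, "short bio", max_revisions=2))
```

Because each stage is just a callable with a fixed signature, changing the model behind one stage does not touch the loop or the other stages. In this toy setup the weaker refiner never satisfies the judge, so the loop simply stops at the revision cap.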

This is how AI gets smarter, faster, and more reliable.

👉 What’s one area in your workflow where you wish AI could “self-optimize” like this?