MCP Servers Demystified: How They’re Powering the Next Evolution of AI Agents

A few months ago, if you asked me about MCP servers, I would’ve probably shrugged. I’d seen the term everywhere — YouTube comments, Twitter threads, LinkedIn posts — but every explanation sounded different. Some were too technical, others too vague.

To be honest, it was intimidating.

But I knew one thing: MCP servers were going to matter. So I dug in. And what started as confusion eventually turned into clarity — and a working demo I ran live while self-hosting n8n.

This is the story of how I went from “What even is MCP?” to “Wow, this changes everything.”

Step 1: Understanding the Basics — LLMs & Agents

Let’s rewind.

At its core, a large language model (LLM) like ChatGPT is simple: you give it text, and it gives you text back.

That’s it.

But then something changed. We gave LLMs tools.

Suddenly, they weren’t just answering questions — they were taking actions. Example: instead of just drafting an email, an AI agent could actually send that email through a Gmail API call.
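Here is a minimal sketch of that shift, not how any particular agent framework does it. The `Outbox` class is a stand-in for a real mail API (nothing is actually sent), and the shape of `tool_call` is an assumption about what a model with tool access might emit instead of plain text.

```python
from dataclasses import dataclass, field


@dataclass
class Outbox:
    """Stand-in for a real mail API; it only records what would be sent."""
    sent: list = field(default_factory=list)

    def send_email(self, to: str, subject: str, body: str) -> str:
        self.sent.append({"to": to, "subject": subject, "body": body})
        return f"sent to {to}"


outbox = Outbox()

# Hypothetical structured output from the model: an action, not just a draft.
tool_call = {
    "tool": "send_email",
    "arguments": {"to": "sam@example.com", "subject": "Hello", "body": "Draft text"},
}


def run_tool_call(call: dict) -> str:
    """Execute the action the model asked for."""
    if call["tool"] != "send_email":
        raise ValueError(f"unknown tool: {call['tool']}")
    return outbox.send_email(**call["arguments"])


result = run_tool_call(tool_call)
```

The point is the last line: the model’s output triggers a function call, so the email moves from “drafted” to “sent”.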

This was the birth of the modern AI agent.

Step 2: The Problem With Tools

Tools worked great… to a point.

Each tool had one specific function, such as sending an email.

An agent would decide which tool to use based on the request.
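A rough sketch of that selection step might look like the following. The three tools are hypothetical stubs, and the keyword match in `choose_tool` is only a stand-in for the model’s judgment; a real agent would let the LLM pick based on tool descriptions.

```python
from typing import Callable


# Hypothetical tools, each wired up by hand.
def send_email(request: str) -> str:
    return "email sent"


def create_event(request: str) -> str:
    return "event created"


def search_web(request: str) -> str:
    return "search results"


# Registry: tool name -> (trigger keyword, function). Every entry is manual work.
TOOLS: dict[str, tuple[str, Callable[[str], str]]] = {
    "send_email": ("email", send_email),
    "create_event": ("meeting", create_event),
    "search_web": ("search", search_web),
}


def choose_tool(request: str) -> str:
    """Stand-in for the model's decision: first tool whose keyword appears."""
    lowered = request.lower()
    for name, (keyword, _) in TOOLS.items():
        if keyword in lowered:
            return name
    raise LookupError("no tool matches this request")


def handle(request: str) -> str:
    """Pick a tool for the request and run it."""
    name = choose_tool(request)
    _, func = TOOLS[name]
    return func(request)
```

Notice that adding a fourth capability means writing a new function and a new registry entry yourself.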

But here’s the catch: every one of those tools had to be built and configured by hand, and then kept up to date.

That’s when I realized: tools alone wouldn’t cut it.

Step 3: Enter MCP Servers

This is where MCP servers clicked for me.

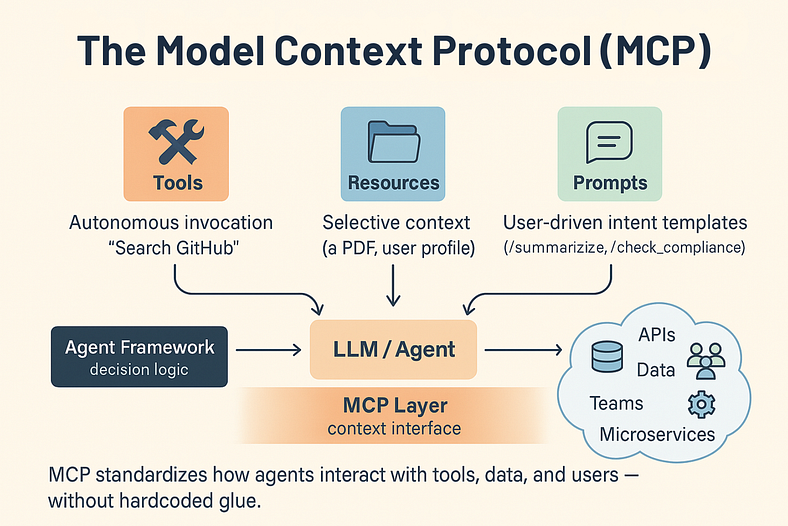

Think of an MCP server as a translation layer between your AI agent and the services you want to connect with (Notion, Airtable, Firecrawl, etc.).

Instead of hardcoding dozens of tools, the agent asks the MCP server:

“What actions do you support? What resources do you have? What’s the schema for using them?”

And the MCP server replies with a complete playbook: the actions it supports, the resources it exposes, and the schema for calling each one.

The agent can then decide on the fly what to do — without you having to manually pre-build every tool.
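To make that exchange concrete, here is a small sketch of the discovery step. MCP messages are JSON-RPC 2.0, and a client lists a server’s tools with the `tools/list` method. Everything else here is an assumption for illustration: `fake_server` is an in-memory stand-in rather than a real server, and the descriptions, field types, and required fields are invented.

```python
import json

# In-memory stand-in for an MCP server's tool catalog. Descriptions, types,
# and "required" lists are assumptions, not a real server's schema.
SERVER_TOOLS = [
    {
        "name": "listRecords",
        "description": "List records in a table",
        "inputSchema": {
            "type": "object",
            "properties": {
                "baseId": {"type": "string"},
                "tableId": {"type": "string"},
                "filters": {"type": "string"},
            },
            "required": ["baseId", "tableId"],
        },
    },
    {
        "name": "createRecord",
        "description": "Create a record in a table",
        "inputSchema": {
            "type": "object",
            "properties": {
                "baseId": {"type": "string"},
                "tableId": {"type": "string"},
                "fields": {"type": "object"},
            },
            "required": ["baseId", "tableId", "fields"],
        },
    },
]


def fake_server(raw_request: str) -> str:
    """Answer a JSON-RPC request the way a server would for tools/list."""
    request = json.loads(raw_request)
    if request["method"] == "tools/list":
        result = {"tools": SERVER_TOOLS}
        return json.dumps({"jsonrpc": "2.0", "id": request["id"], "result": result})
    error = {"code": -32601, "message": "Method not found"}
    return json.dumps({"jsonrpc": "2.0", "id": request["id"], "error": error})


def discover_tools() -> dict:
    """Ask the server what it supports and index the schemas by tool name."""
    request = json.dumps({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
    response = json.loads(fake_server(request))
    return {tool["name"]: tool["inputSchema"] for tool in response["result"]["tools"]}


catalog = discover_tools()
```

After one round trip, `catalog` holds every action name along with the schema for calling it, and none of that was hardcoded on the agent side.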

Step 4: Seeing It in Action

To test this, I connected n8n to a Notion MCP server.

Suddenly, instead of juggling 7+ separate tools, the agent had access to 13 actions directly from Notion. And each action came with its schema.

Want to list records? Use listRecords with baseId, tableId, and filters. Want to create a record? Use createRecord with its schema.
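For a sense of what the agent actually sends, here is a sketch of the call itself. In MCP, invoking a tool is a JSON-RPC 2.0 request with the method `tools/call`, carrying the tool `name` and its `arguments`. The helper `build_tool_call` is a hypothetical name, and the ID values are placeholders rather than real identifiers.

```python
import json


def build_tool_call(request_id: int, name: str, arguments: dict) -> str:
    """Serialize a JSON-RPC 2.0 request for MCP's tools/call method."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }
    return json.dumps(message)


# Placeholder values; a real call would use the IDs from your own workspace.
payload = build_tool_call(
    2,
    "listRecords",
    {"baseId": "BASE_ID_PLACEHOLDER", "tableId": "TABLE_ID_PLACEHOLDER", "filters": ""},
)
```

The agent fills in `arguments` by reading the schema the server handed over, which is why it no longer has to guess the parameter names.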

No more guessing. No more manual configs. Just a clean conversation between the agent and the MCP server.

Step 5: A Real Demo

One of my favorite tests was with the Firecrawl MCP server.

I asked the agent:

“Extract the rewards program name from Chipotle.com.”

✅ “The rewards program is called Chipotle Rewards.”

Not perfect, but amazing to watch. The agent wasn’t just blindly failing — it was adapting, retrying, and problem-solving using the MCP server’s schemas.
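The retry behavior can be sketched roughly like this. It is not Firecrawl’s actual schema or the agent’s real logic: `extract_schema` and its field names are assumptions, and the `attempts` list stands in for the successive guesses a model might make after reading the previous error.

```python
def missing_fields(schema: dict, arguments: dict) -> list:
    """Return required fields from the schema that the arguments lack."""
    return [name for name in schema.get("required", []) if name not in arguments]


def call_with_retries(schema: dict, attempts: list) -> tuple:
    """Try each candidate argument set in order; stop at the first valid one."""
    errors = []
    for number, arguments in enumerate(attempts, start=1):
        missing = missing_fields(schema, arguments)
        if not missing:
            return number, arguments, errors
        # The error text is what the model would read before trying again.
        errors.append(f"attempt {number}: missing {', '.join(missing)}")
    raise RuntimeError("; ".join(errors))


# Hypothetical schema for a scraping tool; field names are assumptions.
extract_schema = {"type": "object", "required": ["url", "prompt"]}

attempts = [
    {"url": "https://www.chipotle.com"},
    {"url": "https://www.chipotle.com", "prompt": "Find the rewards program name"},
]

number, arguments, errors = call_with_retries(extract_schema, attempts)
```

The first attempt fails because a required field is missing, the error names that field, and the second attempt supplies it. The schema is what turns a failure into something the agent can fix.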

Step 6: Why It Matters

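Here’s why MCP servers are such a big deal: the agent discovers actions instead of relying on hardcoded tools, every action arrives with its schema, and the manual upkeep goes away.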

In short: MCP servers make agents smarter, leaner, and far easier to scale.

Step 7: The Big Picture

Looking back, I realized I’d already been working with a similar concept in my “Ultimate Assistant” project.

I had a main agent and smaller “child agents” for Gmail, Calendar, Airtable, and content creation. Each child knew its tools and parameters.

That setup worked — but MCP servers make it even more powerful by removing the manual upkeep.

Now, I don’t just have tools. I have a dynamic system of servers that tell the agent what they can do, so changes on the server side show up without me rewiring anything.
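A tiny sketch of why the upkeep disappears, under the assumption that the agent asks for the action list each time rather than caching it. `FakeServer` and `Agent` are hypothetical classes, not part of n8n or any MCP SDK.

```python
class FakeServer:
    """In-memory stand-in for an MCP server whose tool list can change."""

    def __init__(self) -> None:
        self._tools = {"listRecords": {"required": ["baseId", "tableId"]}}

    def list_tools(self) -> list:
        return sorted(self._tools)

    def add_tool(self, name: str, schema: dict) -> None:
        # Simulates the server's maintainers shipping a new action.
        self._tools[name] = schema


class Agent:
    """Holds no tool definitions of its own; it always asks the server."""

    def __init__(self, server: FakeServer) -> None:
        self.server = server

    def available_actions(self) -> list:
        return self.server.list_tools()


server = FakeServer()
agent = Agent(server)

before = agent.available_actions()
server.add_tool("createRecord", {"required": ["baseId", "tableId", "fields"]})
after = agent.available_actions()
```

Nothing in `Agent` changed between `before` and `after`, yet the new action is available. That is the maintenance work the server takes off your plate.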

Final Thoughts

At first, MCP servers felt like an abstract buzzword.

But once I saw them in action, the fog lifted.

They’re not just about connecting tools. They’re about giving AI agents a universal translator — a way to interact with any system, at scale, with clarity.

And the best part? This is only the beginning.

The future isn’t just about smarter AI models. It’s about giving those models the right protocols and servers to take action in the real world.

That’s what MCP unlocks.

💬 What’s the first workflow you’d give your MCP-powered agent?