I Asked 4 AI Tools to Clone My Website — Here’s What Actually Worked

A few days ago, I had a question stuck in my head: Can AI replicate a website from just a URL? No manual coding. No templates. Just a single prompt.

I decided to find out.

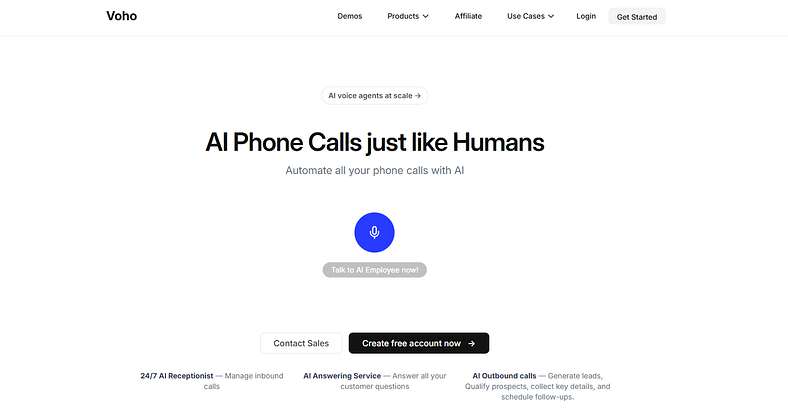

I picked my own website — VOHO.ai — and ran a live test across four different AI tools that promise instant website generation.

The four tools I tested were Qwen, V0, Bolt, and Lovable.

My goal was simple: Give each tool a URL and ask it to rebuild the website exactly as it appears, with no instructions or screenshots — unless absolutely required.

The Setup

I used the same prompt across all four platforms:

“Can you exactly duplicate this website? [URL]”

I didn’t mention frameworks, layout preferences, or visual styles. I wanted to see what each tool could do with as little direction as possible — just like a non-technical user would.

Qwen: Fast, Simple, Surprisingly Good

The first tool I tried was Qwen, a Chinese AI model by Alibaba.

It took less than a minute to generate a complete website. No screenshots needed. No errors. Just results.

When I checked the output, I was impressed. It had the same headline, layout structure, color scheme, and copy as my original VOHO.ai site. Of course, not every detail was exact, but it was a solid attempt.

Then I clicked “Deploy”.

The site went live in seconds. Clean, fast, and effortless.

Qwen Score: 8/10. The fastest tool I tested. It could improve with better prompt understanding, but the baseline was strong.

V0: Developer-Friendly, But Slower Than Expected

Next up was V0, built by the team at Vercel.

This tool is known for its clean developer experience and its use of modern front-end tooling, such as React components written in TSX (TypeScript with JSX).

It delivered a well-structured single-page version of my site. The accuracy was high — but the scope was limited. Only one page. And it took noticeably longer to deploy.

I expected more from a tool so widely used in the developer community.

V0 Score: 6/10. Good technical quality, but slow deployment and limited scope brought the score down.

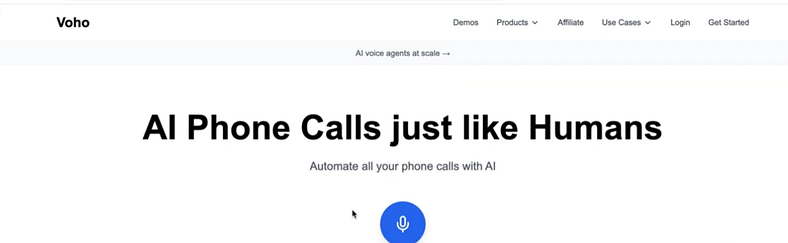

Bolt: Most Accurate, With a Catch

Bolt produced what was probably the most visually accurate version of my site.

There was a catch, though: it wouldn’t generate anything until I uploaded screenshots of the original site.

Once I did, it delivered. The layout, fonts, visuals, and text closely matched my existing site, possibly more closely than any of the other tools managed. Deployment was smooth and fast.

But the need for visual input makes it slightly less “AI magic” and a bit more manual.

Bolt Score: 8/10. Excellent accuracy, if you’re willing to do a bit more work upfront.

Lovable: Fast Output, Wrong Result

I had high hopes for Lovable. The interface was clean, and it processed the URL quickly.

But the website it generated didn’t resemble my site at all.

Different layout. Different color palette. Different text. It looked like it had pulled content from somewhere else entirely.

It did deploy the result successfully, which deserves some credit. But as for duplicating the original website, it missed the mark.

Lovable Score: 2/10. Functional, but inaccurate. I would not recommend it based on this test alone.

Post-Deployment Adjustments

Once all four sites were deployed, I revisited my initial scores.

What I Learned

This experiment gave me a clear sense of where AI website generation stands today.

What’s Next

In the next test, I’ll go deeper into prompt engineering. Instead of just providing a URL, I’ll test what happens when we guide the AI with structured instructions, screenshots, and goals.

The goal? Push these tools to their limits — and figure out how to build production-ready websites using AI alone.

Stay tuned.